DPCB: Data Processing Center of Barcelona

DPCB is embedded in the Gaia DPAC group at the University of Barcelona (IEEC-UB), in close cooperation with the Barcelona Supercomputing Centre (BSC) and the Consorci de Serveis Universitaris de Catalunya (CSUC), in Barcelona, Spain.

The DPCB hardware used in Gaia operations is located at the BSC (specifically the MareNostrum supercomputer), whereas the team at the IEEC-UB carries out the management, operations, development and testing.

DPCB runs the following systems during operations:

- Intermediate Data Updating (IDU) from CU3, mainly composed of IDU-XM (cross-match), IDU-CAL (calibrations) and IDU-IPD (the main process, determining the Image Parameters).

- DpcbTools from DPCB, including the DPCB Data Manager (DDM) and DPCB Monitoring tools (DMON).

- Gaia Transfer System (GTS), including Aspera and DTSTool from CU1, for the data transfers from/to DPCE.

DPCB is part of the DPAC cyclic processing and runs the several stages of IDU (XM, CAL and IPD) in every cycle. Depending on the inputs available, particularly at the beginning of operations, only some of these subsystems may run. In later stages of the mission, some subsystems may need to be executed repeatedly within a given cycle.

Data reception and arrangement run daily on the data sent continuously by DPCE.

The overall DPCB processing aims to provide an updated cross-match table (using the latest attitude and source catalogue available), updated calibrations (for bias, background and instrument response in the form of an LSF, or Line Spread Function), and updated image parameters derived using those calibrations. The other DPAC systems (mainly AGIS and PhotPipe) will then use these updated data, which will improve their results.
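The ordering of the IDU stages within a cycle can be sketched as a small dependency graph. This is a minimal sketch, not DPCB's actual scheduler: the stage names come from the text, the requirement that IPD runs after CAL follows from the image parameters being derived with the updated calibrations, and the requirement that CAL runs after XM is an assumption based on the order in which the stages are listed. The `cycle_order` helper is hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map for one processing cycle.
# IDU-IPD after IDU-CAL follows the text; IDU-CAL after IDU-XM is assumed.
IDU_STAGES = {
    "IDU-XM": set(),
    "IDU-CAL": {"IDU-XM"},
    "IDU-IPD": {"IDU-CAL"},
}


def cycle_order(stages_to_run):
    """Return a valid execution order for the requested subset of stages.

    Dependencies on stages that are not run in this cycle are dropped,
    mimicking cycles in which only some subsystems run.
    """
    selected = set(stages_to_run)
    graph = {stage: IDU_STAGES[stage] & selected for stage in selected}
    return list(TopologicalSorter(graph).static_order())


print(cycle_order(["IDU-IPD", "IDU-XM", "IDU-CAL"]))
# prints: ['IDU-XM', 'IDU-CAL', 'IDU-IPD']
print(cycle_order(["IDU-CAL", "IDU-IPD"]))
# prints: ['IDU-CAL', 'IDU-IPD']
```

Dropping dependencies on stages outside the requested subset is one simple way to model cycles in which, for lack of inputs, only some subsystems run.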

DPCB also participates actively in the definition and design of the overall DPAC strategy for the cyclic activities involved in the Gaia data reduction.

Finally, another important role of DPCB has been the generation of CU2 simulation datasets for the development and testing of all DPAC products. CU2 simulations were essential before the Gaia launch to test the DPAC daily processing software, and they will still be used after launch to test the cyclic processing chains. Simulations are currently still being generated for CU9 software validation and testing, and they are essential in preparing the first Gaia catalogue release.

DPCB Hardware

MareNostrum:

MareNostrum, one of the most powerful supercomputers in Europe, is in charge of running IDU during the operational phase. Its latest upgrade, MareNostrum III, is composed of 3028 computing nodes, each with 16 cores of Intel SandyBridge-EP E5-2670 processors (2.6 GHz), 32 GB of RAM and 500 GB of local disk. The nodes are interconnected through a point-to-point fiber optic network (Infiniband 10Gb).
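The per-node figures above translate into machine-wide totals with simple arithmetic. The sketch below assumes decimal units (1 TB = 1000 GB) and only multiplies the quoted numbers, so it says nothing about how much of the machine is actually allocated to Gaia; the `aggregate` helper is introduced here purely for illustration.

```python
def aggregate(nodes, cores_per_node, ram_gb_per_node, disk_gb_per_node):
    """Return machine-wide totals from per-node figures (decimal units)."""
    return {
        "cores": nodes * cores_per_node,
        "ram_tb": nodes * ram_gb_per_node / 1_000,
        "local_disk_pb": nodes * disk_gb_per_node / 1_000_000,
    }


# Per-node figures quoted above for MareNostrum III.
totals = aggregate(nodes=3028, cores_per_node=16,
                   ram_gb_per_node=32, disk_gb_per_node=500)
print(f"{totals['cores']:,} cores, {totals['ram_tb']:.1f} TB RAM, "
      f"{totals['local_disk_pb']:.2f} PB local disk")
# prints: 48,448 cores, 96.9 TB RAM, 1.51 PB local disk
```

Note that the aggregated local disk is spread across the nodes and is separate from the shared GPFS file system.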

The central file system of MareNostrum, built upon IBM GPFS, consists of more than 20 storage servers providing a total capacity of 1.9 PB, available to all the nodes with parallel access through 10Gb Ethernet. In addition, a long-term storage system of about 5 PB is available; it will be used to store both the IDU input data (Raw DB) and the final IDU output data.

DPCB also uses a virtual server provided by BSC to run some specific tasks, mainly related to data transfers and data arrangement.

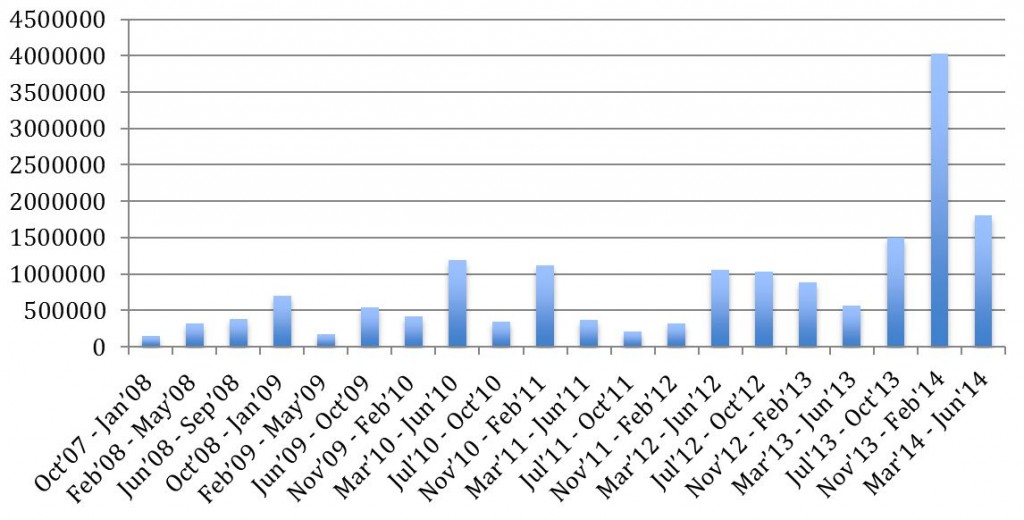

Consumed CPU Hours at BSC

CESCA/CSUC Hardware:

Xeon 8-core cluster (Prades):

It has 45 nodes, each with 2 quad-core Intel Xeon processors. 29 of the nodes have Xeon E5472 at 3 GHz and 32 GB RAM, while the other 16 have Xeon X5550 at 2.66 GHz (identical to those used for IDT operations at DPCE during the first months of the mission) and 48 GB RAM. The total processing performance is 2.68 TFLOP.
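Because Prades mixes two node types, its aggregate core count and memory are sums over the two groups. A minimal sketch using only the figures quoted above; the `NodeGroup` type is introduced here purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One homogeneous group of nodes in a cluster (illustrative type)."""
    cpu: str
    nodes: int
    sockets_per_node: int
    cores_per_socket: int
    ram_gb_per_node: int

    @property
    def cores(self) -> int:
        return self.nodes * self.sockets_per_node * self.cores_per_socket

    @property
    def ram_gb(self) -> int:
        return self.nodes * self.ram_gb_per_node


# Two quad-core sockets per node in both groups, as described above.
PRADES = [
    NodeGroup("Xeon E5472 @ 3 GHz", nodes=29, sockets_per_node=2,
              cores_per_socket=4, ram_gb_per_node=32),
    NodeGroup("Xeon X5550 @ 2.66 GHz", nodes=16, sockets_per_node=2,
              cores_per_socket=4, ram_gb_per_node=48),
]

total_nodes = sum(g.nodes for g in PRADES)
total_cores = sum(g.cores for g in PRADES)
total_ram_gb = sum(g.ram_gb for g in PRADES)
print(total_nodes, total_cores, total_ram_gb)
# prints: 45 360 1696
```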

Altix UV cluster (Pirineus):

This is a shared-memory system with 1344 Intel Xeon X7542 cores (2.66 GHz) and 6056 GB RAM, offering about 14 TFLOP. It is used for GOG simulations for CU9 and specific scientific simulations related to Gaia.

Consumed CPU Hours at CSUC

DPCB Software

DPCB also develops software within DPAC, specifically DpcbTools. This product includes a set of tools and a framework developed to allow DPAC applications to make the best use of the hardware available at DPCB (especially the BSC hardware). The main features provided by this product are:

- Data Access Layer (DAL), servers and caches, specifically developed for each of the DPCB-BSC repositories: a local repository on each node, remote repositories on other nodes, one global repository shared by all the nodes (GPFS), and a backup or permanent repository. The DAL aims to use these repositories as efficiently as possible, especially considering the I/O requirements of CU3-IDU. The data servers and caches will efficiently manage access to data needed by several processes: each node will have a cache for some data, while other data will be stored in caches or servers shared between several nodes.

- Node and task management tools, for determining and launching the tasks needed to process a given dataset, and for managing those tasks and the nodes on which they run.

- DPCB Data Manager (DDM), the main interface between GTS and the DPCB storage resources. This software is in charge of handling the data received from DPCE, performing data backups and managing data transfers to DPCE.

- DPCB Monitoring Tools (DMON), also developed with BSC hardware in mind but applicable to CESCA/CSUC and other DPCs as well.
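The idea behind the DAL, reading data from the cheapest repository that holds it and keeping frequently used data close to the process, can be sketched as a tiered lookup with a per-node cache. This is a hypothetical illustration, not the DpcbTools API: the class names, the tier ordering (node cache first, then local disk, other nodes, GPFS and permanent storage) and the least-recently-used eviction policy are all assumptions made for the example, and the repositories are modelled as in-memory dictionaries.

```python
from collections import OrderedDict
from typing import Mapping, Optional, Sequence, Tuple


class LRUCache:
    """Hypothetical per-node cache with least-recently-used eviction."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: bytes) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict least recently used


class TieredDataAccess:
    """Look data up in repositories ordered from cheapest to most expensive."""

    def __init__(self, cache: LRUCache,
                 tiers: Sequence[Tuple[str, Mapping[str, bytes]]]):
        self.cache = cache
        self.tiers = tiers  # assumed order: local disk, other nodes, GPFS, permanent

    def read(self, key: str) -> Tuple[str, bytes]:
        """Return (name of the tier that served the data, data)."""
        cached = self.cache.get(key)
        if cached is not None:
            return "node-cache", cached
        for name, repo in self.tiers:
            if key in repo:
                data = repo[key]
                self.cache.put(key, data)  # keep a copy close to the process
                return name, data
        raise KeyError(key)


dal = TieredDataAccess(
    LRUCache(capacity=2),
    [
        ("local-disk", {"calib/bias": b"bias"}),
        ("remote-node", {}),
        ("gpfs", {"xm/table": b"xm"}),
        ("permanent", {"raw/chunk-1": b"raw"}),
    ],
)
print(dal.read("xm/table")[0])  # prints: gpfs
print(dal.read("xm/table")[0])  # prints: node-cache
```

A shared cache or data server serving several nodes could be modelled as one more tier in the list; the point of the sketch is only that slower, globally shared repositories are consulted last.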

DPCB quality assurance and operational readiness activities

DPCB has participated in several activities and test campaigns prior to operations to fully test the Data Processing Center (DPC).

Some of the preparation activities carried out are:

- Testing data reception from DPCE at DPCB in the Two-by-Two Stage 1 testing campaign (November 2010 to January 2011).

- End-to-End Stage 2 tests, to test the reception and handling of data from DPCE (June-December 2011).

- Two-by-Two Stage 2 tests, testing the return of DPCB data to DPCE (November 2011 to January 2012).

- End-to-End Stage 3 tests, testing the execution of IDU at DPCB and return of data to DPCE (first half of 2012).

- Operations rehearsal 1, focused on the commissioning phase and thus just receiving and arranging the data received from DPCE (June 2012).

- Operations rehearsal 2, focused on the commissioning phase and thus just receiving and arranging the data received from DPCE (December 2012).

- Operations rehearsal 3, focused on the commissioning phase and thus just receiving and arranging the data received from DPCE (April 2013).

- Operations rehearsal 4, focused on the nominal phase, just receiving and arranging the data received from DPCE (September 2013).

DPCB participates in all the validation and qualification activities defined in the DPAC Operations Validation Plan, including rehearsals and challenges, as well as preliminary data processing tests with the very first data received from the spacecraft during commissioning.

Relevant DPCB milestones over recent years

2009:

- GASS and GOG cycle 6 releases and datasets integrated into DPCB

- IDT and IDU tests for the software releases of development cycles 6 and 7

2010:

- Web deployment of IDT scientific results obtained with IDV at DPCB-CESCA

- Web monitoring of IDT progress at DPCB-CESCA

- DPCB Local Repository for IDU with Ingestor from ESAC through GTS

- Generation of the first dataset for end-to-end DPAC software tests (6 months of simulated Gaia data) and second dataset for end-to-end DPAC software tests (12 months of data)

- GASS simulator release with optimized I/O (developed with the help of DPCB) integrated and tested

- Integration and testing of IDT and IDU for cycle 8 software versions

- DPCB Two-by-Two tests with DPCE

2011:

- Full-scale high-density 24h GASS simulation generated

- Tests of IDT using the full-scale data at DPCB-CESCA

- GTS Two-by-Two Stage 2 tests passed successfully

- First complete version of the IDU Data Arrangers (including job creation) tested at DPCB

- First version of MPJ-based data caches included in DpcbTools tested

2012:

- DPCB-BSC review of, and decision on, the final system architecture for IDU

- IDU executed successfully in the End-to-End Stage 3 tests

- IDU performance testing campaign and resource estimations

- Started development and testing of an HDF5-specific file format for DPCB

- Development of the DpcbTools software and GTS integration

2013:

- MareNostrum upgraded. Software updated to run on the new machine

- DPCB server at BSC upgraded for better performance, more disk space and better reliability before entering operations

- DpcbTools and GTS operational readiness tests for DPCE-to-DPCB data transfers

- Generation of GASS datasets for operational readiness tests of critical daily systems

2014:

- Large-scale GASS simulation for DPAC systems volume testing

- Complete mission catalogue simulations with GOG for CU9 tests

- Generation of GASS datasets for IDU and AGIS operational readiness tests, including two 5-year simulations

- Large IDU-XM test focused on framework performance using 5 years of simulated data

- DpcbTools release with complete functionality for nominal operations and optimized for best performance

- Operations Rehearsal for cyclic software

- IDU tests on real Gaia data

2015:

- First IDU execution in operations